The Bayer pattern is a common term you will come across while working with a digital camera. Wikipedia does a great job of explaining the Bayer pattern:

wiki

Bayer Pattern:

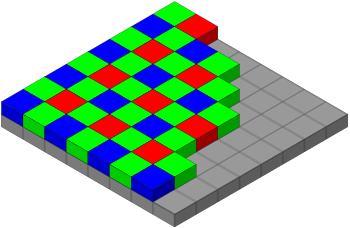

The most straightforward explanation is that you have a CCD with three types of photo sensors (red, green and blue). The green sensors are sensitive to luminance, and the red and blue ones are sensitive to chrominance (color). Human eyes are more sensitive to luminance, so a typical CCD has twice as many green sensors as red or blue ones. These photo sensors are arranged in a specific pattern (RGBG, GRBG, RGGB). The raw output (also referred to as a Bayer pattern image) records only one of the three colors at each photo sensor. Each photo sensor has a corresponding pixel in the image. From the Bayer pattern we only have the raw value at each pixel, but we want to know the true color (r, g, b). This process of computing the color at each pixel is called demosaicing (or debayering). A typical arrangement of photo sensors is shown in the image above (linked from Wikipedia).
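To make the layout concrete, here is a minimal Python sketch of how a raw Bayer image relates to a full-color one. It assumes the RGGB arrangement and a plain list-of-rows image; the names `RGGB`, `bayer_color` and `mosaic` are made up for this illustration and are not from any library.

```python
# Assumed layout: RGGB, i.e. the 2x2 tile
#   R G
#   G B
# repeated across the whole sensor. Other layouts only change this table.
RGGB = (("R", "G"), ("G", "B"))


def bayer_color(row, col, tile=RGGB):
    """Return which color ('R', 'G' or 'B') the sensor at (row, col) records."""
    return tile[row % 2][col % 2]


def mosaic(rgb_image, tile=RGGB):
    """Simulate a raw Bayer image by keeping one channel per pixel.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    The result is a list of rows of single raw values.
    """
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel[bayer_color(r, c, tile)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]
```

In every 2x2 tile this keeps two green samples and one each of red and blue, which is the "twice as many green sensors" property described above. Demosaicing is the reverse job: guessing the two values that `mosaic` threw away at each pixel.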

Demosaicing:

There are a number of demosaicing methods out there, and a survey of all of them is out of scope for this post. I implemented three techniques that are intuitive, computationally cheap and work reasonably well.

Normal:

This is the first thought that will come to anybody's mind:

For each pixel, do bilinear interpolation. Which neighbors you average depends on where you are in the pattern. For example, in the above image, if you are on a green with reds directly above and below it, you just take the average of those two red values. But if you are on a blue, you take the average of all four diagonally neighboring reds, and so on. This approach works reasonably well, but artifacts (aliasing, zippering, purple fringing etc.) show up on the edges. These are quite noticeable.
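A rough sketch of this in plain Python is below. It is not the exact code I used. It assumes an RGGB layout, an image of at least 2x2 pixels stored as a list of rows, and it handles borders by averaging whatever neighbors exist, which is my own simplification. The names `color_at` and `demosaic_bilinear` are hypothetical.

```python
RGGB = (("R", "G"), ("G", "B"))  # assumed 2x2 tile; swap for other layouts


def color_at(row, col, tile=RGGB):
    """Color recorded by the sensor at (row, col)."""
    return tile[row % 2][col % 2]


def demosaic_bilinear(raw, tile=RGGB):
    """Return rows of (r, g, b) tuples computed from a raw Bayer image.

    The pixel's own color is kept as recorded. Each missing color is the
    average of the samples of that color inside the 3x3 window around the
    pixel, which is what bilinear interpolation reduces to on this grid.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    # Border assumption: neighbors outside the image are skipped.
                    if 0 <= rr < height and 0 <= cc < width:
                        color = color_at(rr, cc, tile)
                        sums[color] += raw[rr][cc]
                        counts[color] += 1
            own = color_at(r, c, tile)
            out_row.append(tuple(
                raw[r][c] if ch == own else sums[ch] / counts[ch]
                for ch in "RGB"
            ))
        out.append(out_row)
    return out
```

On a green pixel the window contains exactly two reds and two blues, and on a red or blue pixel it contains four greens and four of the opposite color, so this matches the cases described above.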

Edge Aware:

Since most of the artifacts are on or near edges, instead of blindly doing bilinear interpolation you do edge-aware interpolation. For example, in the pattern below, if you are trying to find the green value at position B, you only average the pair of green values with the smaller gradient. That is, you first compute the absolute difference of the two horizontal green values (left and right of B) and of the two vertical green values (above and below B), pick the direction with the lower difference, and take the average of those two green values. This change makes the demosaicing much better.

grgrg

bgBgb

grgrg
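Here is a small sketch of that rule for the green value at a red or blue position such as B. It assumes the pixel is not on the image border and that the raw image is a list of rows. Averaging all four greens when both differences are equal is my own choice for the tie case, and `green_edge_aware` is a hypothetical name.

```python
def green_edge_aware(raw, r, c):
    """Estimate green at a red or blue site (r, c), away from the border."""
    left, right = raw[r][c - 1], raw[r][c + 1]
    top, bottom = raw[r - 1][c], raw[r + 1][c]

    h_diff = abs(left - right)  # change along the row
    v_diff = abs(top - bottom)  # change along the column

    if h_diff < v_diff:
        # Smoother horizontally: interpolate along the row.
        return (left + right) / 2
    if v_diff < h_diff:
        # Smoother vertically: interpolate along the column.
        return (top + bottom) / 2
    # Tie (assumption): no preferred direction, use all four greens.
    return (left + right + top + bottom) / 4
```

The point is to interpolate along an edge rather than across it: if a vertical edge passes through B, the left and right greens differ a lot, so only the top and bottom ones are used.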

Edge Aware Weighted:

Taking it a step further, instead of blindly discarding the two green values with the higher gradient, we weight the horizontal and vertical pairs according to the gradient values. Let's define the following:

HDiff = |LeftGreen - RightGreen|

HSum = LeftGreen + RightGreen

VDiff = |TopGreen - BottomGreen|

VSum = TopGreen + BottomGreen

Final = 0.5 * (VSum * HDiff + HSum * VDiff) / (HDiff + VDiff)

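I will leave it to your intuition why 0.5 is needed :-) Note that the formula is undefined when HDiff + VDiff is zero; in that case just fall back to the plain average of the four greens. This small extension is less prone to noise and also keeps strong edges intact.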
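The formula translates almost directly into code. The sketch below makes the same assumptions as before (a list-of-rows raw image, a red or blue pixel away from the border), uses absolute differences so the weights cannot go negative, and falls back to the plain four-way average when both differences are zero. The name `green_edge_weighted` is hypothetical.

```python
def green_edge_weighted(raw, r, c):
    """Gradient-weighted green estimate at a red or blue site (r, c)."""
    left, right = raw[r][c - 1], raw[r][c + 1]
    top, bottom = raw[r - 1][c], raw[r + 1][c]

    h_diff = abs(left - right)
    v_diff = abs(top - bottom)
    h_sum = left + right
    v_sum = top + bottom

    total = h_diff + v_diff
    if total == 0:
        # Flat in both directions: the weights are undefined, so use
        # the plain average of the four greens (assumption).
        return (h_sum + v_sum) / 4

    # The vertical pair is weighted by the horizontal difference and vice
    # versa, so the smoother direction contributes more to the result.
    return 0.5 * (v_sum * h_diff + h_sum * v_diff) / total
```

With greens of 10 and 200 to the left and right and 100 and 102 above and below, the result lands just above the vertical average of 101 instead of jumping to exactly 101 as the hard switch would. When the two differences are equal, it reduces to the plain average of all four.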

This drastically improves results. There might be even fancier ways, but for now I have fixed my issue, so I will research further in the future! For comparison, I am including sample images produced with the normal (left) and edge aware weighted (right) methods. Look along the edges of the chair, the board and anywhere else. You can judge the difference yourself.